The Curse of Coordination: Why Agents Cannot be Your Teammates Yet

Can AI agents work together as teammates? We find that coordinating agents perform much worse than a single agent given the same total workload. This coordination deficit presents a fundamental barrier to deploying AI systems that can work alongside humans or other agents.

Key Results

We evaluate state-of-the-art coding agents on CooperBench and observe the curse of coordination: agents perform significantly worse when cooperating than when working alone.

The Coordination Gap

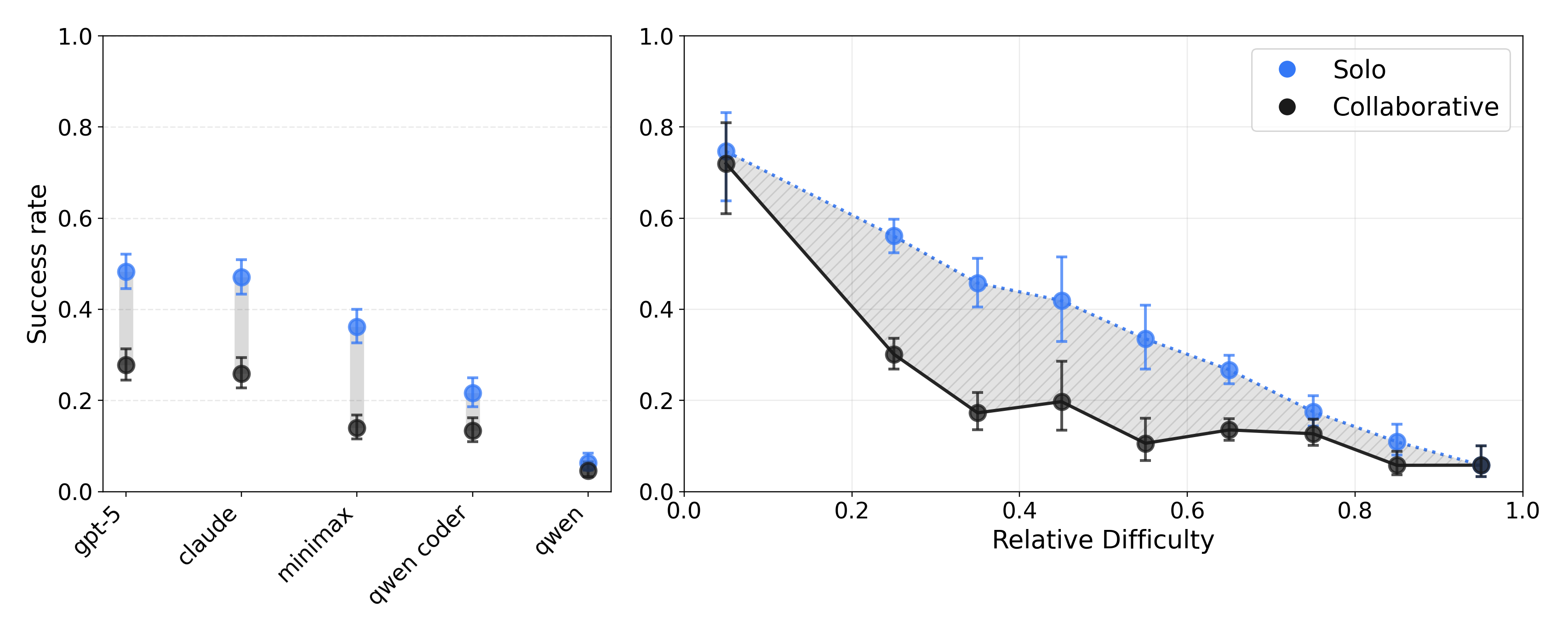

Agents based on GPT-5 and Claude Sonnet 4.5 achieve a success rate of only ~25% under two-agent cooperation on CooperBench, around 50% lower than a "Solo" baseline in which one agent implements both features.
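A gap like this can be stated in absolute percentage points or as a relative drop from the Solo rate, and the two readings differ. The sketch below shows how each would be computed. The function name `coordination_gap` and the input rates are illustrative assumptions, not reported results.

```python
def coordination_gap(solo_rate: float, coop_rate: float) -> tuple[float, float]:
    """Return (absolute gap in percentage points, relative drop as a fraction of solo)."""
    if not 0 < solo_rate <= 1 or not 0 <= coop_rate <= 1:
        raise ValueError("rates must be fractions in [0, 1], with solo_rate > 0")
    absolute_points = (solo_rate - coop_rate) * 100
    relative_drop = (solo_rate - coop_rate) / solo_rate
    return absolute_points, relative_drop


# Hypothetical rates chosen only to illustrate the arithmetic.
abs_gap, rel_drop = coordination_gap(solo_rate=0.50, coop_rate=0.25)
print(f"{abs_gap:.1f} points, {rel_drop:.0%} relative drop")
```

With these made-up inputs, a 25-point absolute gap corresponds to a 50% relative drop, which is why it helps to say which of the two a headline number refers to.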

Research Questions

How well can agents cooperate with each other?

What role does communication play in agent-agent cooperation?

What coordination failures do agents exhibit?

Key Coordination Failures

Communication Breakdown

Agents send vague, ill-timed, and inaccurate messages. They fail to respond to direct questions and flood channels with repetitive status updates.

Commitment Deviation

Even with effective communication, agents deviate from their commitments. They make unverifiable claims about code state and ignore agreed-upon integration points.
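One way to picture what a verifiable claim about code state could look like is to attach a content digest that the partner can check once the file is visible to it, for example after a merge. This is a minimal sketch under that assumption. `CodeClaim`, `make_claim`, and `verify_claim` are hypothetical names and are not part of CooperBench.

```python
import hashlib
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class CodeClaim:
    """A statement about a file, plus a digest that can be checked later."""
    path: str
    summary: str
    sha256: str


def content_digest(content: str) -> str:
    return hashlib.sha256(content.encode("utf-8")).hexdigest()


def make_claim(path: str, summary: str, content: str) -> CodeClaim:
    return CodeClaim(path=path, summary=summary, sha256=content_digest(content))


def verify_claim(claim: CodeClaim, files: Mapping[str, str]) -> bool:
    """True only if the file exists and its content matches the claimed digest."""
    content = files.get(claim.path)
    return content is not None and content_digest(content) == claim.sha256


# Hypothetical file content used only for illustration.
promised = "def parse(text, strict=False):\n    return text.split()\n"
claim = make_claim("parser.py", "added strict flag to parse()", promised)

print(verify_claim(claim, {"parser.py": promised}))              # matches the claim
print(verify_claim(claim, {"parser.py": "def parse(text):\n"}))  # promised change absent
print(verify_claim(claim, {}))                                   # file missing entirely
```

A claim that carries no checkable evidence leaves the partner with nothing to do but trust it, which is the situation the failure description above points at.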

Incorrect Expectations

Agents hold incorrect expectations about their partner's plans, duplicate work despite warnings, and overwrite changes they believe will merge cleanly.

Emergent Coordination Behaviors

Among runs that succeed, we observe coordination patterns largely absent from failed runs:

Role Division

Agents agree on who handles which part of the task and establish clear boundaries around their scope.

Resource Division

Agents avoid collisions by partitioning shared resources: specific files, code ranges, or ownership blocks.
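As a rough illustration of resource division, an agreed partition can be written down as per-agent file and line-range ownership and checked for collisions before anyone edits. The `Region` type, the `find_collisions` helper, and the file paths are assumptions made for this sketch. They do not describe how the agents in the study actually represent ownership.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Region:
    agent: str
    path: str
    start: int  # first owned line, inclusive
    end: int    # last owned line, inclusive


def overlaps(a: Region, b: Region) -> bool:
    """Two regions overlap if they share a file and their line ranges intersect."""
    return a.path == b.path and a.start <= b.end and b.start <= a.end


def find_collisions(regions: list[Region]) -> list[tuple[Region, Region]]:
    """Return every pair of overlapping regions owned by different agents."""
    return [
        (a, b)
        for a, b in combinations(regions, 2)
        if a.agent != b.agent and overlaps(a, b)
    ]


# Hypothetical partition: disjoint ranges in one file, a second file owned by one agent.
plan = [
    Region("agent_1", "core/cache.py", 1, 40),
    Region("agent_2", "core/cache.py", 41, 90),
    Region("agent_2", "core/api.py", 10, 30),
]
print(find_collisions(plan))  # empty list: the partition is clean

# Adding a range that intrudes on agent_2's block produces one collision.
revised = plan + [Region("agent_1", "core/api.py", 25, 35)]
print(len(find_collisions(revised)))
```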

Negotiation

Agents resolve conflicting approaches by proposing alternatives and converging on a single plan before acting.

The Coordination Gap

Our experiments reveal a consistent pattern: agents perform significantly worse when collaborating than when working alone. The gap persists across all models and increases with task difficulty.

Left: Success rates by model (Solo in blue, Collaborative in black). Right: Success rates vs relative task difficulty. The shaded area shows the coordination gap.

Emergent Coordination Patterns

We observe three emergent coordination behaviors in successful runs: role division, resource division, and negotiation. These patterns are not prompted or scaffolded; they emerge when agents successfully navigate partial observability.

Coordination Failure Taxonomy

Through qualitative analysis of agent interactions, we identified observable symptoms (how failures manifest) and underlying capability gaps (why failures occur).

Capability Gaps (Causes)

| Cause | Definition | % |
|---|---|---|
| Expectation | Cases where one agent has clearly communicated what they are doing, but the other agent still treats the situation as if that work is not being done. This reflects a failure to model the state of the other agent's code changes. | 62.9 |
| Communication | Breakdowns in using language to coordinate. This includes failures in information sharing and decision loops, where agents do not effectively communicate their intentions, questions, or status updates. | 27.5 |
| Commitment | Cases where an agent is not doing the things they promised to do. This includes failures to establish or maintain verifiable integration contracts. | 9.6 |

Failure Symptoms

| Symptom | Meaning | % |
|---|---|---|
| Work overlap | Both agents independently implement the same functionality, duplicating work and overwriting details. | 33.2 |
| Divergent architecture | Incompatible design decisions lead to semantic loss even under a clean merge. | 29.7 |
| Repetition | Verbose status messages add little new information and reduce signal. | 14.7 |
| Unresponsiveness | Direct questions or requests are not answered, breaking the decision loop. | 8.7 |
| Unverifiable claims | Agent asserts a change or interface decision without evidence the partner can check. | 4.3 |
| Broken commitment | Confident completion claims create false shared context when the promised change is absent. | 3.7 |
| Dependency access | Missing risk communication leaves agents unable to anticipate merged dependency interactions. | 1.7 |
| Placeholder misuse | An explicit integration contract exists but is applied differently than agreed. | 1.5 |
| Parameter flow | Ambiguity about a changing interface leaves one agent implementing against an outdated contract. | 1.3 |
| Timing dependency | Agents agree on order but fail to communicate an enforceable plan that preserves it after merge. | 1.1 |

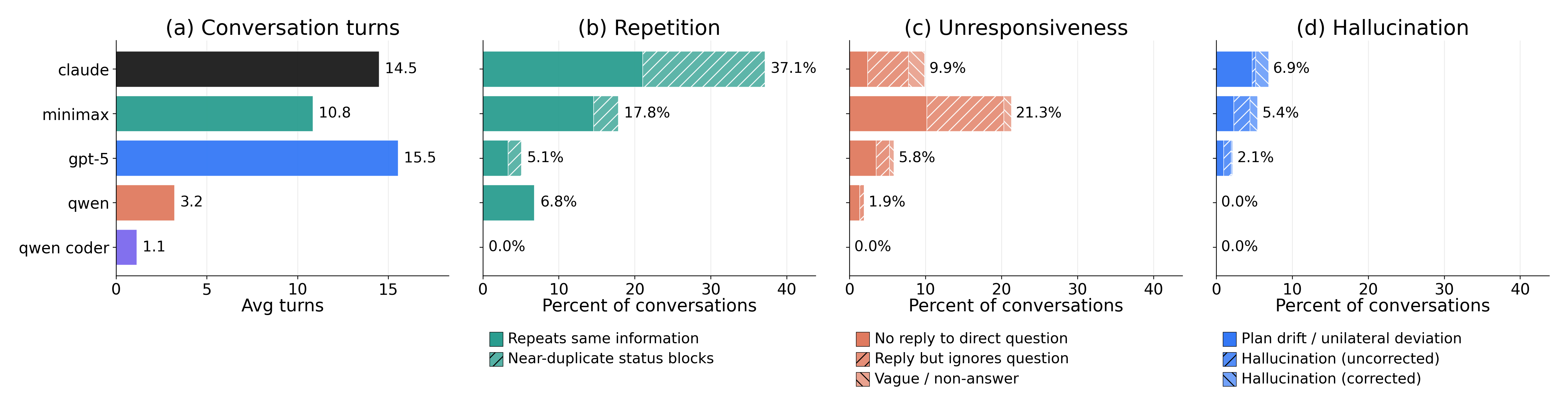

Communication Patterns

Analysis of agent conversations reveals significant differences in communication styles across models. Some agents engage in lengthy negotiations while others barely communicate.

(a) Average conversation turns by model. (b) Repetition rates. (c) Unresponsiveness patterns. (d) Hallucination rates.

About CooperBench

CooperBench is the first benchmark designed to measure how well AI agents can cooperate when handling individual tasks with potential conflicts. We focus on software engineering as a realistic domain where humans typically need to navigate work in a team.

Task Design

Each task assigns each agent a feature to be implemented based on the same repository state. Conflicts are intentionally embedded at the code level, as the assigned features are logically compatible but require agents to modify overlapping or interdependent code.

Eight co-authors with real-world software engineering backgrounds created new features, unit tests, and ground-truth code for these libraries, ensuring high-quality and realistic task design.
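The code-level overlap described in the task design can be pictured as two edits that start from the same base file and touch the same lines. The sketch below uses Python's standard `difflib` to find which base lines each edit touches and where they intersect. It is a simplified heuristic, not the benchmark's merge procedure, and the helper names and sample file contents are assumptions made for illustration.

```python
import difflib


def touched_lines(base: list[str], edited: list[str]) -> set[int]:
    """Indices of base lines that an edit replaces, deletes, or inserts before."""
    touched: set[int] = set()
    matcher = difflib.SequenceMatcher(a=base, b=edited, autojunk=False)
    for tag, i1, i2, _j1, _j2 in matcher.get_opcodes():
        if tag in ("replace", "delete"):
            touched.update(range(i1, i2))
        elif tag == "insert":
            touched.add(i1)  # the insertion point counts as touching that position
    return touched


def conflicting_lines(base: list[str], edit_a: list[str], edit_b: list[str]) -> set[int]:
    """Base line indices touched by both edits; non-empty suggests a likely conflict."""
    return touched_lines(base, edit_a) & touched_lines(base, edit_b)


# Hypothetical base file and two feature edits derived from it.
base = ["def load(path):", "    data = read(path)", "    return data"]
feature_a = ["def load(path, cache=None):", "    data = read(path)", "    return data"]
feature_b = ["def load(path, retries=3):", "    data = read(path)", "    return data"]
feature_c = ["def load(path):", "    data = read(path)", "    return data or {}"]

print(conflicting_lines(base, feature_a, feature_b))  # both edits change the signature line
print(conflicting_lines(base, feature_a, feature_c))  # the edits touch different lines
```

In this example the two features are logically compatible (a cache argument and a retry count can coexist), yet both edits rewrite the same signature line, so neither version can simply replace the other.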

Getting Started

Installation

Clone the CooperBench repository:

    git clone https://github.com/akhatua2/CodeConflictBenchmark.git
    cd CodeConflictBenchmark
    pip install -r requirements.txt

Download Dataset

Access the dataset from HuggingFace:

    from datasets import load_dataset

    dataset = load_dataset("SALT-NLP/CooperBench")

BibTeX

    @article{khatua2025coordination,
      title={The Curse of Coordination: Why Agents Cannot be Your Teammates Yet},
      author={Khatua, Arpandeep and Zhu, Hao and Tran, Peter and Prabhudesai, Arya and Sadrieh, Frederic and Lieberwirth, Johann K. and Yu, Xinkai and Fu, Yicheng and Ryan, Michael J. and Pei, Jiaxin and Yang, Diyi},
      journal={arXiv preprint},
      year={2025},
      url={https://cotomata.com/}
    }

Authors

¹Stanford University, ²SAP Labs US

*Equal contribution