Can AI agents work together as teammates? We find that coordinating agents perform much worse than a single agent given the same total workload. This coordination deficit presents a fundamental barrier to deploying AI systems that can work alongside humans or other agents.

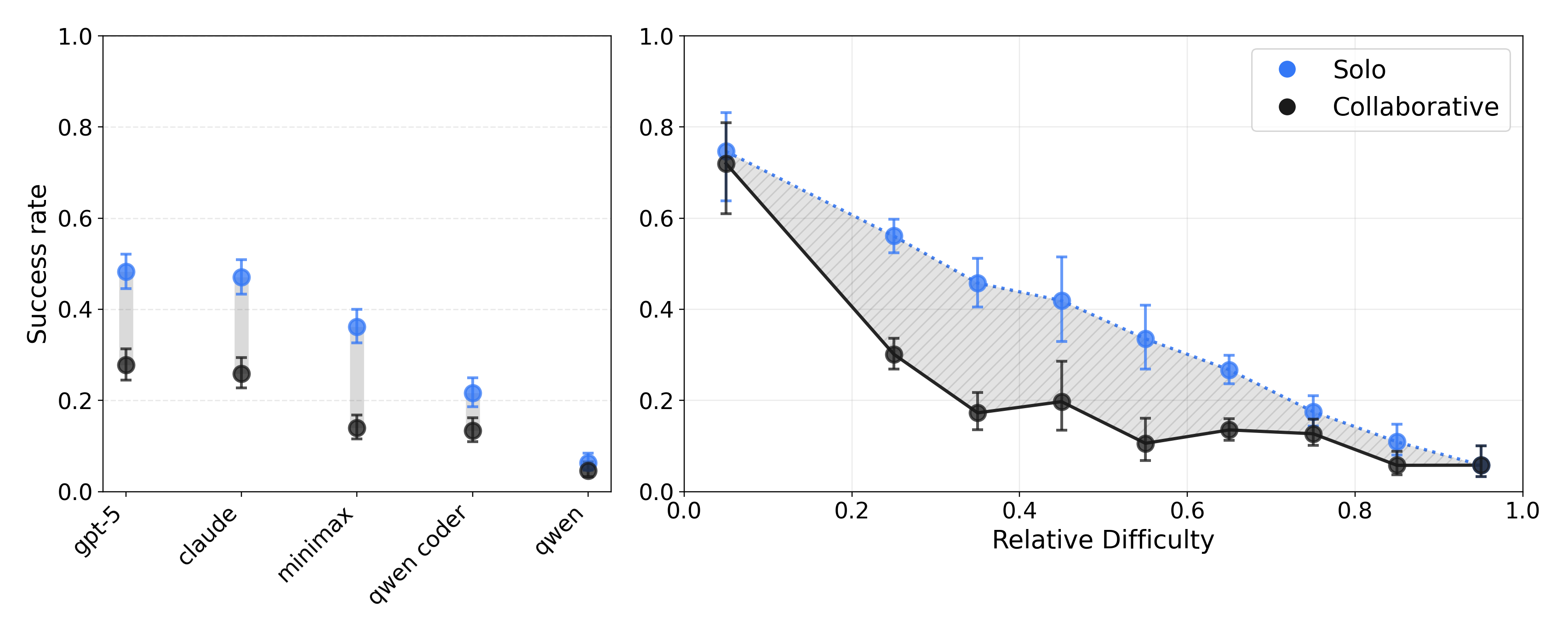

Left. Success rates by model. Solo (blue) consistently exceeds Coop (black). Right. The coordination gap is largest for medium-difficulty tasks.

Key Findings

1. Agents perform worse together than alone

GPT-5 and Claude Sonnet 4.5 achieve only 25% success with two-agent cooperation, roughly 50% lower than when a single agent handles both tasks. This gap persists across all models and task difficulties.
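The phrase "roughly 50% lower" can be read as a relative drop or as a difference in percentage points, so it may help to compute both. The sketch below is illustrative only. The 25% cooperative rate comes from the text above, while the 50% solo rate is an assumption chosen to match the relative reading and is not a reported figure. The function name `coordination_gap` is hypothetical.

```python
def coordination_gap(solo_rate: float, coop_rate: float) -> tuple[float, float]:
    """Return (gap in percentage points, relative drop as a fraction of the solo rate)."""
    if not 0 < solo_rate <= 1 or not 0 <= coop_rate <= 1:
        raise ValueError("rates must be fractions in [0, 1], with solo_rate > 0")
    absolute_points = (solo_rate - coop_rate) * 100
    relative_drop = (solo_rate - coop_rate) / solo_rate
    return absolute_points, relative_drop


# coop_rate is from the text; solo_rate is an ASSUMED value for illustration.
points, relative = coordination_gap(solo_rate=0.50, coop_rate=0.25)
print(f"{points:.0f} points, {relative:.0%} relative drop")
```

Under that assumed solo rate, a 25% cooperative success rate corresponds to a 25-point gap and a 50% relative drop.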

2. Communication reduces conflicts but not failures

Agents spend up to 20% of their budget on communication. This reduces merge conflicts but does not improve overall success. The channel is jammed with repetition, unresponsiveness, and hallucination.

3. Three capability gaps underlie coordination failures

Even when agents communicate well, coordination breaks down due to:

- Expectation failures (63%) where agents fail to integrate information about partner state

- Communication failures (28%) where questions go unanswered, breaking decision loops

- Commitment failures (10%) where agents break promises or make unverifiable claims

Emergent Coordination Patterns

Among successful runs, we observe coordination patterns largely absent from failures. These patterns are not prompted or scaffolded.

Role Division: Agents agree on who handles which part of the task. For example, one agent delegates: "I'll add header + octal_str; you add binary_str between them."

CooperBench is the first benchmark designed to measure how well AI agents can cooperate when handling individual tasks with potential conflicts. We constructed 652 tasks from 12 popular open-source libraries across Python, TypeScript, Go, and Rust.

Each task assigns two agents different features that can be implemented independently but may conflict without proper coordination. Eight co-authors with real-world software engineering backgrounds created new features, unit tests, and ground-truth code.
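One way to picture how two independently implementable features can still collide is to treat each agent's change as a set of line ranges and look for overlaps within the same file. The following is a minimal sketch under that assumption, not the benchmark's actual conflict check. The `Edit` class, the `overlapping_edits` function, and the file names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Edit:
    """A contiguous range of lines one agent modified (hypothetical structure)."""
    path: str
    start: int  # first modified line, inclusive
    end: int    # last modified line, inclusive


def overlapping_edits(a: list[Edit], b: list[Edit]) -> list[tuple[Edit, Edit]]:
    """Return every pair of edits that touch the same file and share at least one line."""
    return [
        (x, y)
        for x in a
        for y in b
        if x.path == y.path and x.start <= y.end and y.start <= x.end
    ]


# Hypothetical example: both agents add code in the same region of one file.
agent_a = [Edit("formatter.py", 40, 55)]
agent_b = [Edit("formatter.py", 50, 62), Edit("tests/test_formatter.py", 1, 20)]

for x, y in overlapping_edits(agent_a, agent_b):
    shared = f"{max(x.start, y.start)}-{min(x.end, y.end)}"
    print(f"potential conflict in {x.path}: lines {shared}")
```

This is a simplification: a real merge tool can also report conflicts for adjacent changes, and features can interfere semantically without touching the same lines. It does show why agents benefit from agreeing in advance on who edits which region.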

Getting Started

git clone https://github.com/cooperbench/CooperBench.git

cd CooperBench

pip install -r requirements.txt

Citation

@article{cooperbench2026,

title={CooperBench: Why Coding Agents Cannot be Your Teammates Yet},

author={Khatua*, Arpandeep and Zhu*, Hao and Tran, Peter and Prabhudesai, Arya

and Sadrieh, Frederic and Lieberwirth, Johann K. and Yu, Xinkai

and Fu, Yicheng and Ryan, Michael J. and Pei, Jiaxin and Yang, Diyi},

journal={arXiv preprint},

year={2026},

url={https://cooperbench.com/}

}

Authors

Stanford University & SAP Labs · *Equal contribution